How do we go from Breakthrough to Broad Impact with AI?

A look back at the first half of 2025 and what it will take to democratize AI

Our world is being rapidly reshaped by Artificial Intelligence (AI), a technological transformation that follows a predictable arc. This arc moves through three key phases: an aspirational stage of theoretical ideas; an exploitative phase of innovation breakthroughs, in which power and access are concentrated; and finally a crucial democratization phase, in which benefits are broadly shared and development is ethical. AI is largely in the exploitative phase, and it is up to us when and how we advance towards democratization. Looking back at the first half of 2025, we are not moving fast enough.

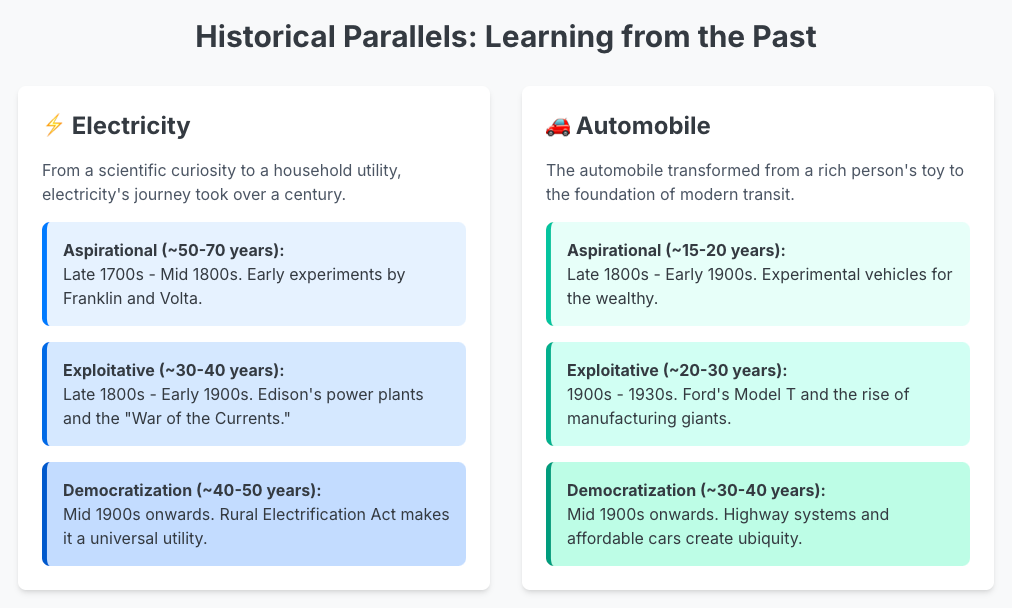

This is a conceptual framework illustrating stages of adoption and societal impact, inspired by Technological Revolutions and Techno-Economic Paradigms by Carlota Perez [1]. Perez describes long cycles of technological revolutions: an "installation period" in which new technologies emerge and attract financial speculation, often leading to bubbles, followed by a "deployment period" in which the technology becomes widely adopted and drives real economic growth, ultimately resulting in a new way of life.

Consider the historical journey of another transformational general-purpose technology, electricity. Its aspirational beginnings (late 1700s - early 1800s, ~50-70 years) were driven by scientific curiosity. The exploitative phase (late 1800s - early 1900s, ~30-40 years) emerged with commercial power plants and monopolies, which limited access. Finally, democratization (mid-1900s onwards, ~40-50 years) made it a universal utility through initiatives like the Rural Electrification Act [2][3][4][5][6].

Similarly, the automobile followed this path. Its aspirational period (late 1800s - early 1900s, ~15-20 years) featured experimental, expensive vehicles [7]. The exploitative phase (1900s - 1930s, ~20-30 years) took off with mass production and centralized manufacturing, raising concerns about urban impact. Democratization then accelerated (mid-1900s onwards, ~30-40 years) as continuous improvements and infrastructure made cars affordable and ubiquitous, fundamentally reshaping society [8][9].

AI's aspirational phase, marked by its formal birth in the 1950s (e.g., the 1956 Dartmouth Conference where "Artificial Intelligence" was coined) and initial theoretical pursuits, spanned approximately 50 years until the early 2000s [10][11]. The exploitative phase then gained significant momentum from the early 2000s with the rise of machine learning and big data, dramatically accelerating with the deep learning revolution around 2012 [12][13]. We are currently ~20-25 years into this exploitative phase, where AI's immense power is heavily concentrated among a few tech giants, leading to pressing concerns about data exploitation, algorithmic bias, resource centralization, knowledge monopolization, and job displacement anxieties [14].

There is a prevailing belief that innovation automatically leads to democratization; however, history proves that it can take decades and focused work to get there. Moreover, even recent breakthroughs like the internet have shown that broad technological adoption does not inherently guarantee equitable prosperity [15].

Given that AI's transformative potential is believed to far exceed that of electricity, there is no clear precedent for how long it will take us to move beyond the exploitative phase. The key point is that democratization of AI is not a given; it will not happen at all unless we choose that path.

Looking back at the first half of 2025, innovation and investment are where we made the most progress. However, I’d argue that the emerging areas (energy use in data centers, workforce transformation and agent interplay, copyright and IP law for the AI era, responsible AI practice, and education and learning in the AI era) are where we need far more attention and progress in the second half if we want any realistic shot at advancing towards the democratization phase.

2025 First Half Significant Progress:

We saw a booming AI landscape driven by significant innovation and investment:

Advanced AI Models & Capabilities: The frontier continues to expand with next-generation models like OpenAI's GPT-5 (expected mid-to-late 2025), Apple Intelligence, Anthropic's Claude 4 (Opus 4 and Sonnet 4), and Google Gemini, pushing boundaries in multimodal AI, advanced reasoning, and on-device capabilities, all hinting at a future of more sophisticated, integrated AI systems. These advancements in multimodal AI are especially notable, enhancing AI's ability to understand and generate diverse content.

DeepSeek's "Bigger is Better" Disruption: Intriguingly, DeepSeek is challenging the notion that larger AI models are always superior. Their rise suggests that efficiency, novel architectures, or specialized approaches can offer compelling alternatives, potentially enabling smaller players to compete without massive computational resources and aiding AI's democratization [16].

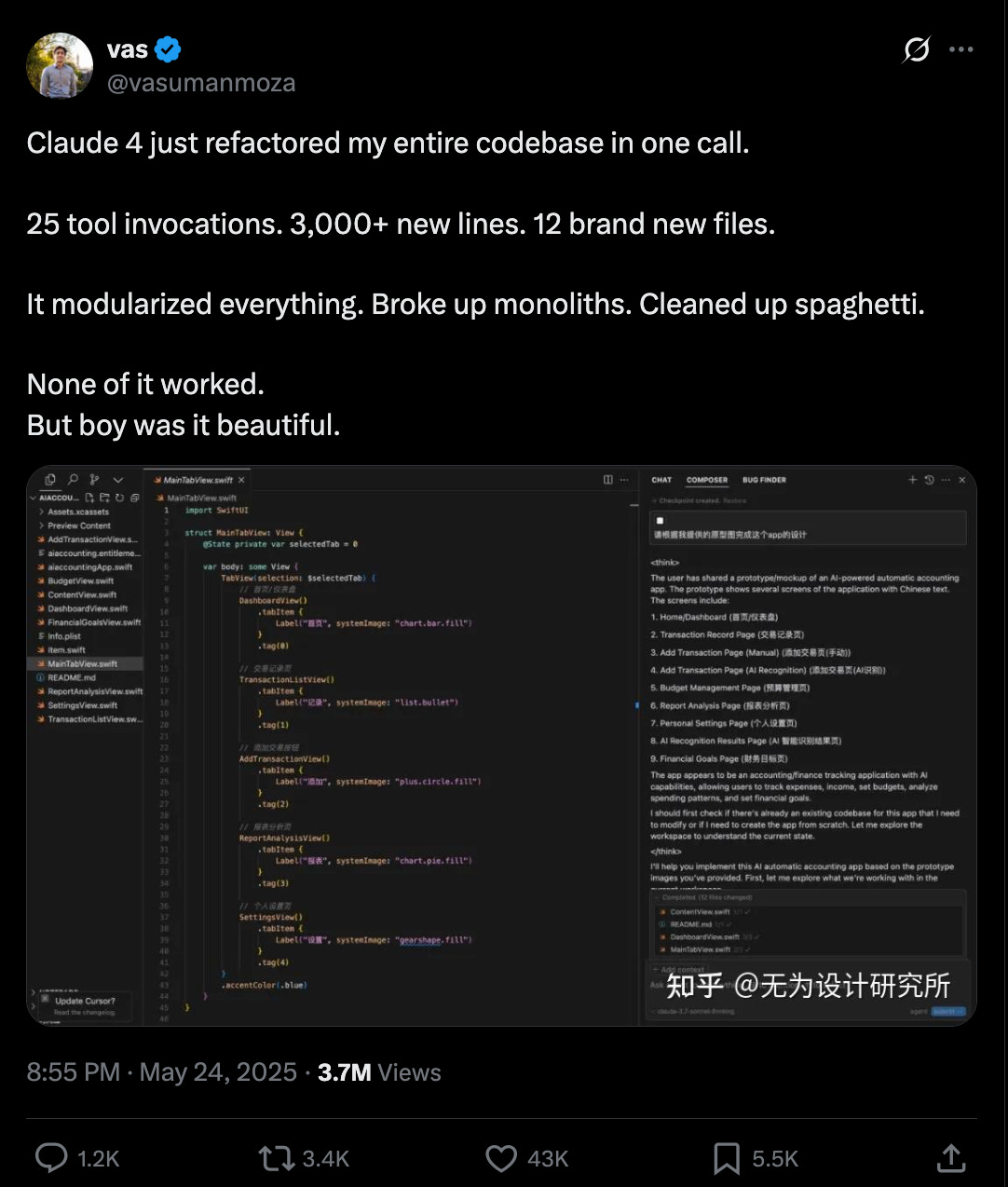

Vibe Coding's Dual Impact: Vibe coding has revolutionized software development, offering immense speed and flexibility for rapid code generation across languages. However, this blessing comes with a significant curse: AI-generated code can contain hallucinations and therefore demands rigorous testing and validation, a challenge already seen with tools like Microsoft's Copilot.

source: X.com

Global AI Ambitions & Diversification: Encouragingly, countries and regions such as China, India, the EU, and the Middle East are actively developing their own AI competencies. This global diversification helps balance power, preventing monopolies and pushing towards democratization [17]. India's National AI Mission (NAIM), for instance, includes the first government-funded multimodal LLM tailored to India's diversity.

Strategic Investment & In-House AI: The industry saw significant strategic maneuvers, including Google's announced $32 billion acquisition of cloud security firm Wiz, reflecting intense financial speculation and M&A activity rebounding to $71 billion in Q1 2025 [18]. Crucially, companies are investing in in-house AI competency and hiring for roles like Chief AI Officer, recognizing that neglecting internal AI development could parallel the fate of companies that missed the digital commerce wave.

2025 First Half Emerging Areas:

Despite rapid progress, these emerging areas demand active engagement and focus:

Data Centers & Energy Concerns: Soaring investments in AI infrastructure, particularly data centers, bring escalating concerns about their substantial energy usage and environmental footprint, a key characteristic of the exploitative phase's centralized resource consumption.

Workforce Transformation & Agents: Pervasive job loss anxiety remains, with no clear consensus on whether AI agents will displace humans en masse. A 2020 NBER paper on automation's impact suggests that the effect of automation on employment isn't uniform; it depends on whether automation raises or reduces the expertise required for the remaining non-automated tasks. For instance, eliminating inexpert tasks can lead to higher wages but fewer jobs, while eliminating expert tasks might lower wages but increase overall employment by opening roles to less specialized workers [19]. This nuanced understanding underscores that the future of work is about reshaping human expertise, not just displacement. Given this, companies must prioritize workforce transformation, leveraging existing human expertise and carefully considering where agentic AI truly works. It is best suited to repetitive, low-risk tasks rather than critical, nuanced decision-making where privacy, confidentiality, and complex judgment are paramount. Recent big tech layoffs further fuel the debate: are these truly instances of AI replacing human work, or a long-overdue correction after decades of talent hoarding?

Copyright and IP Laws: Generative AI has raised urgent copyright issues, highlighted by high-profile cases such as Disney and Universal suing Midjourney [20] and authors suing Meta and Anthropic for using copyrighted works in training. A significant development in the first half of 2025 was a federal court ruling concerning Anthropic, which held that training AI models on lawfully acquired copyrighted works constitutes "fair use" because of its "transformative" nature. However, the ruling also clarified that acquiring and storing pirated works to build training libraries is not protected by fair use, meaning Anthropic (and potentially others) still faces trial over damages for using illegally obtained content [21]. This complex decision, while offering some clarity for AI training processes, underscores the urgent need for comprehensive legal frameworks around intellectual property in the age of AI.

Education & AI Literacy: AI's integration into education presents a polarizing challenge. The debate over whether students should be allowed to use Large Language Models (LLMs)—viewed by some as cheating and others as a new learning tool—highlights a fundamental shift. Irrespective of this debate, schools and institutions are increasingly leaning towards mandating AI education for all, recognizing the critical need to prepare future generations for an AI-powered world.

Demystifying the AI Black Box: A growing chorus of critical voices is actively busting AGI hype and challenging the 'scale at all costs' mentality prevalent in Silicon Valley. It includes authors such as Karen Hao ('Empire of AI') [22], Emily M. Bender and Alex Hanna ('AI Con') [23], and Arvind Narayanan and Sayash Kapoor ('AI Snake Oil') [24], figures like Gary Marcus [25], and an increasing number of AI researchers and practitioners. A significant reality check came from an "Apple paper" arguing that LLMs cannot truly reason or think, grounding expectations while underscoring current limitations [26].

Towards a Democratized AI Future

As this general-purpose technology continues its rapid ascent, both the industry and society at large are grappling with its profound implications. The urgent need to shift from an exploitative phase towards a democratized AI future is clearer than ever. The question is: how will we make 2025 a defining year in shaping AI's trajectory for years to come?

Here are four concrete areas: fostering open access and open-source models, establishing robust ethical governance frameworks, promoting AI literacy, and encouraging decentralized AI development. By acting on these, we can mitigate data exploitation and knowledge silos, combat algorithmic bias, ensure accountability, and guide workforce transformation effectively.

In this context, companies and those in positions of leadership have a significant opportunity to redefine organizational structures and create new jobs focused specifically on these gap areas. For instance, roles in:

AI Governance and Ethics: Positions like AI Ethics Officer, AI Risk Manager, AI Compliance Manager, and Responsible AI Specialist are emerging to ensure AI systems are fair, transparent, and accountable.

Workforce Transformation Specialists: Roles dedicated to understanding how AI impacts human expertise, designing new human-AI collaboration workflows, and implementing upskilling and reskilling programs. This includes Human-AI Collaboration Specialists and AI-Powered Business Analysts who interpret AI-generated insights for strategic decisions.

AI Security and Privacy Engineers: As AI-powered cyberattacks rise, there's a critical need for specialists who can secure AI systems, manage data privacy in complex models, and protect against new threats like adversarial AI. Roles such as Privacy Engineer, AI Security Engineer, and AI Risk Analyst are becoming paramount.

AI Education and Literacy Curators: Professionals focused on developing and delivering AI literacy programs for diverse audiences, ensuring a broader understanding of AI's capabilities and limitations.

source: Reddit.com

Workforce transformation demands a proactive approach to prevent AI from simply dictating its impact on businesses and society. This means strategically defining what is best for each domain and leveraging the deep expertise of the existing workforce and business processes. While Agents and Agentic AI are popular terms, it is vital for business domain teams to truly own the decision of where these will work and where they will not.

Where privacy, confidentiality, and nuanced decision-making are critical, companies do not want to give up agency at this very early stage and may choose to keep agentic AI at the exploration stage. However, where actions are repetitive, work is not differentiated, and security, bias, and privacy risks are minimal, agentic AI could be a valuable part of the workforce equation.

Conclusion:

To collectively advance towards AI democratization, we must proactively engage in the critical areas identified throughout this essay. Literacy is fundamental, as democratization is not possible without our collective progress from basic understanding to fluency and eventually to mastery – much like learning to drive a vehicle. Given that knowledge is currently siloed, it is imperative to seek multiple perspectives and actively follow thinkers and researchers who share their evidence-based findings.

Support and engage with the many organizations that are dedicated to making AI safe and challenging the "scale at all costs" mentality, and follow them to hear their evidence-based findings directly. A few notable organizations at the forefront of this effort include the Distributed AI Research Institute (DAIR) [27], which focuses on exposing the real harms of AI systems while countering AI hype and advocating for alternative tech futures; AI Now Institute [28], dedicated to studying the social implications of artificial intelligence and policy research addressing power concentration in the tech industry; AI2030 [29], a global initiative focused on harnessing AI's transformative power to benefit humanity while minimizing risks and mainstreaming Responsible AI; Stanford HAI [30], dedicated to advancing AI research, education, and policy for the benefit of humanity; and Data & Society Research Institute [31], which studies the social implications of data-centric technologies, automation, and AI, emphasizing equity and human dignity.

At this stage of AI's maturity, following notable writers and thinkers is also critical, as news headlines and influencers' social posts often reveal only a partial story. Prominent voices include Gary Marcus, known for his skeptical and critical perspectives on current AGI promises; Andrew Ng, a leading figure in machine learning education and practical AI applications; Helen Toner, recognized for her work on AI governance and safety; and Melanie Mitchell, who explores the limitations and conceptual foundations of AI. These are just a few examples.

The larger point is to seek alternative perspectives and be willing to question prevailing viewpoints, recognizing that fragmented knowledge and a lack of governance on standards and ethics can make it harder to discern fact-based truth from hype.

Thank you so much for reading my mid-year essay. I welcome your thoughts and reactions; feel free to hit reply or share in the comments.

About the author: Meghna is an AI executive and strategist with over 25 years of experience developing AI competencies and implementing AI capabilities that have delivered responsible enterprise AI value at Fortune 50 firms. As Co-Founder of Kai Roses Inc., she develops AI solutions and offers consulting services.

She actively champions responsible AI adoption as a Distinguished Fellow with AI2030 and promotes AI literacy and knowledge democratization as a Senior Industry Fellow at UC Irvine Center for Digital Transformation.


Disclosure: This essay was developed using Google Deep Research to assist in research, fact-finding, and reference data gathering.

What I find particularly insidious about this stage of the exploitation cycle is the FOMO/HYPE machine of third-party tool makers: the true value and human effort necessary to make good tools work are lost in the noise. Smart colleagues feel at once hopelessly behind and emboldened with wild expectations of what can be achieved with little strategy.

Too many on the inside of AI's growth depend on those wild expectations of users to shore up the length of the exploitation cycle. I am excited for the long list of resources you point to for teams working on democratizing AI.

The technical community and everyone else have two greatly different perspectives on AI. The former sees utility in narrow and constrained use of the "general purpose" LLM technologies across a number of well-defined use cases. The latter engages in a cargo cult. The majority of adverse outcomes come from this cargo cult mentality.